AI Model Monitoring & AI Governance

Satisfy Risk Management & Compliance Requirements by Monitoring Models for Drift, Bias, & Performance (AKA “Explainability”).

Machine Learning Operations (MLOps) provides end-to-end capabilities for deploying, managing, governing, and securing machine learning and other probabilistic models in production. MLOps is a framework for managing machine learning and applying DevOps principles to accelerate the development, testing, and deployment of AI/ML models. Its goal is to help organizations practice continuous integration and continuous delivery (CI/CD) of AI/ML models at scale.

Why AI Model Monitoring & AI Governance?

ModelOps and getting your models into production is just the beginning. Soon, your Compliance & Risk team will be asking you difficult questions. Graduating to a sophisticated AI/ML program means you are prepared for the inevitable audit. But what does that mean?

Sophisticated AI/ML programs monitor their production models for drift, bias, performance, and anomalies, ensuring they identify potential issues and correct them immediately before they become a business problem.

Governing your models, and ensuring they are Reliable, Explainable, and Responsible, is paramount to the longevity and profitability of your AI/ML program.

Enterprises leverage the following list of features to govern and monitor their AI/ML models in production.

AI Monitoring & AI Governance Features.

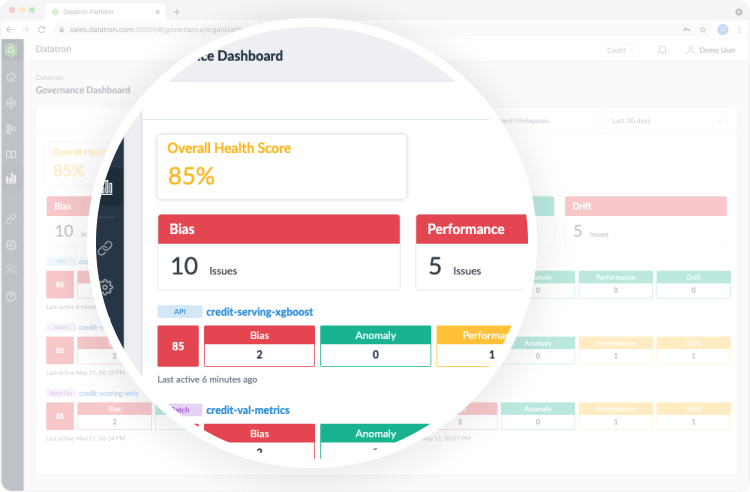

Dashboard.

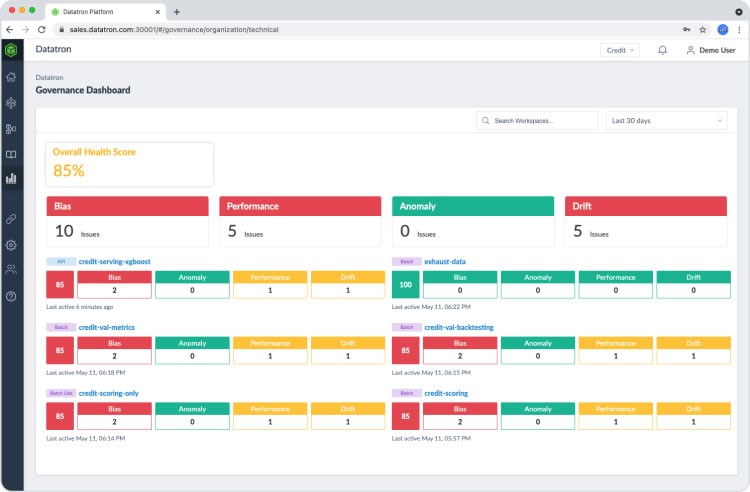

The ability to quickly get an overview of the health of your AI models is a crucial requirement to ensure that you are not exposing your business to unintended risks with severe consequences.

The Dashboard provides a high-level overview of your AI/ML program without having to decipher the nuances of all the AI models running in the environment.

Using metrics and parameters, Data Scientists and ML Engineers can further investigate models, empowering technical teams to make better decisions based on insights.

For the DevOps/IT/Engineering teams, Amerivet provides infrastructure monitoring as an out-of-the-box feature for all machines in a cluster. Activities monitored include CPU usage, memory usage, health check, and more.

Overall Health Score.

A proprietary “Health Score” provides an intuitive, easy-to-understand measure of the overall health of all the models in the system.

Bias, Drift, Performance, Anomaly Detection.

Bias

Bias is calculated as the difference of a particular variable’s distribution between a group of interest and the rest of the population.

Datatron supports bias monitoring in four scenarios:

- Regression without feedback

- Regression with feedback

- Classification without feedback

- Classification with feedback
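One simple reading of the bias definition above is a difference in mean model output between the group of interest and everyone else. The sketch below illustrates that idea for the "without feedback" scenarios; the function name `rate_difference` and the sample data are hypothetical and do not represent the platform's actual calculation.

```python
from statistics import mean


def rate_difference(predictions, in_group):
    """Mean prediction for the group of interest minus the mean for the rest.

    predictions: numeric model outputs (0/1 labels or regression values)
    in_group:    booleans, True where the row belongs to the group of interest
    """
    group = [p for p, g in zip(predictions, in_group) if g]
    rest = [p for p, g in zip(predictions, in_group) if not g]
    if not group or not rest:
        raise ValueError("both the group and the rest must be non-empty")
    return mean(group) - mean(rest)


# Hypothetical classification outputs: 1 = approved, 0 = denied
predictions = [1, 0, 1, 1, 0, 1, 0, 0]
in_group = [True, True, True, True, False, False, False, False]

gap = rate_difference(predictions, in_group)
print(f"approval-rate gap: {gap:+.2f}")
```

A value near zero suggests the two populations receive similar outputs. When feedback (ground truth) is available, the same comparison could be applied to per-row errors instead of raw predictions.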

Drift

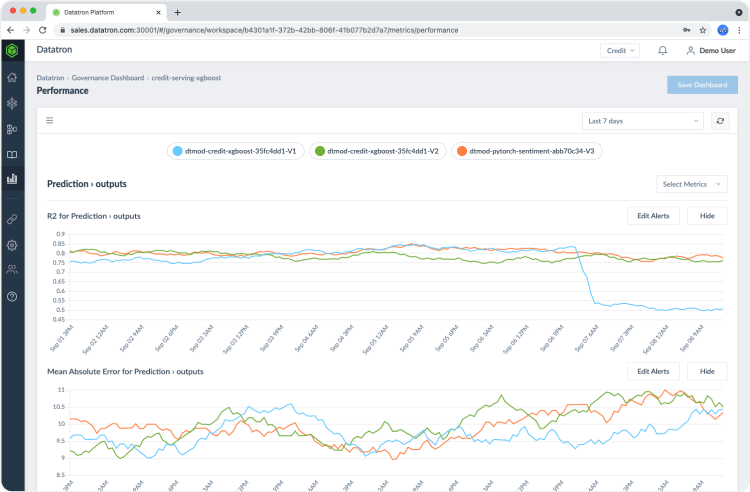

- Data Drift – Also known as covariate shift, data drift occurs when the distribution of the input data changes after a model is deployed. Each data point lives in a high-dimensional space whose dimension is defined by the number of input features. If data drift occurs after the model is deployed, the new data points will likely come from a region of the input space less populated by training data.

- Concept Drift – Concept drift occurs when the relationship between the inputs and the target changes, so the decision boundary the model learned no longer holds. It is often observed in time-series or streaming data.
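Data drift on a single numeric feature can be quantified by comparing the binned distribution of training data against recent production data. The sketch below uses the Population Stability Index (PSI), a commonly used drift statistic; it is an illustrative choice and not necessarily the method the platform uses. The function name `psi`, the bin count, and the sample data are assumptions.

```python
import math


def psi(reference, current, bins=10, eps=1e-6):
    """Population Stability Index between two samples of one numeric feature.

    Bin edges are derived from the reference (training) sample. Values in
    `current` that fall outside that range are clamped into the edge bins.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    ref = fractions(reference)
    cur = fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical feature values: training data vs. shifted production data
training = [i / 100 for i in range(100)]
production = [0.5 + i / 200 for i in range(100)]

print("PSI, same data:   ", psi(training, training))
print("PSI, shifted data:", psi(training, production))
```

PSI is zero when the two binned distributions match and grows as they diverge. Detecting concept drift generally requires feedback (ground-truth labels), since it concerns the input-to-target relationship rather than the inputs alone.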

Performance

Anomaly Detection

Amerivet’s proprietary anomaly detection mechanism identifies potential issues via an ensemble approach, gathering data and metrics from multiple sources, including model performance, bias, drift, model logs, system logs, and more. This enables Amerivet to more swiftly and accurately identify potential issues not found in traditional metrics calculations.

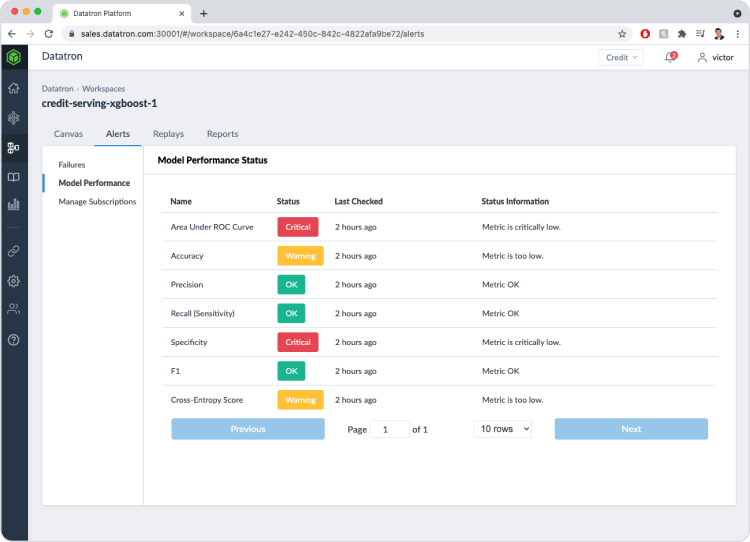

Alerts

Customers can set alerts and/or automatic shutdown defaults that are triggered when the model deviates from pre-defined performance thresholds. These notification mechanisms act as a continuous feedback loop – when a KPI is impacted, the platform notifies the user.

You can select the type of monitoring and how to be notified, such as via email, Slack, PagerDuty, or other methods. If you prefer, you can leverage Datatron’s API to integrate with your own preferred alerting tools.
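The threshold-based alerting described above can be pictured as a set of rules evaluated against the latest metric values. The following is a minimal sketch under stated assumptions: `AlertRule`, `evaluate`, the metric names, and the threshold values are all hypothetical and are not the platform's API.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AlertRule:
    metric: str       # name of the monitored metric (hypothetical)
    threshold: float  # pre-defined limit for that metric
    direction: str    # "above" or "below": which side of the limit is a breach
    action: str       # "notify" or "shutdown"

    def breached(self, value: float) -> bool:
        if self.direction == "above":
            return value > self.threshold
        if self.direction == "below":
            return value < self.threshold
        raise ValueError(f"unknown direction: {self.direction!r}")


def evaluate(rules: List[AlertRule], metrics: Dict[str, float]) -> List[AlertRule]:
    """Return the rules breached by the latest metric values."""
    return [r for r in rules if r.metric in metrics and r.breached(metrics[r.metric])]


rules = [
    AlertRule("accuracy", 0.90, "below", "notify"),
    AlertRule("psi", 0.25, "above", "shutdown"),
]
latest = {"accuracy": 0.87, "psi": 0.10}

fired = evaluate(rules, latest)
for rule in fired:
    print(f"{rule.action}: {rule.metric} breached threshold {rule.threshold}")
```

In a real integration, each fired rule would be routed to the chosen channel (email, Slack, PagerDuty, or a custom tool) rather than printed.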

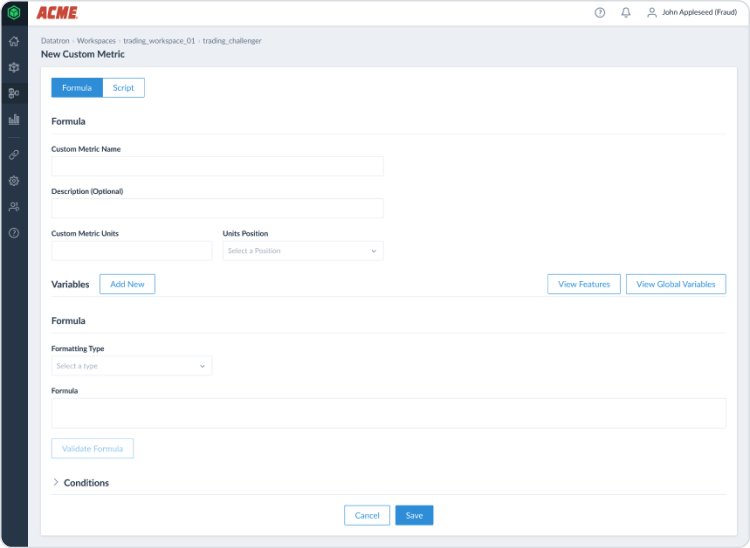

Custom Metrics

Monitoring and reporting can be customized based on an organization’s KPI metrics. Datatron provides easy connection mechanisms to pull data from customer data sources.

Activity Log & Audit Trail

Activity Log

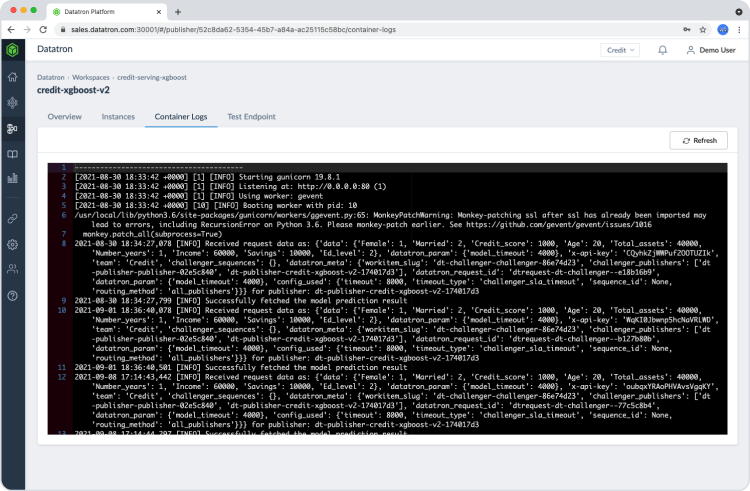

Amerivet maintains thorough information on models and datasets, such as versioning, history, and user information, so older information can be tracked down efficiently for review. Amerivet also maintains complete logs of requests and responses for all models.

Audit Trail

Leverage logs generated by Datatron to produce a complete audit trail. Users can now observe how their system behaved at a given point in time, as well as the circumstances around the system’s behavior, dramatically accelerating the complete audit process. Go back in time to narrow down the model used for a particular prediction, along with the corresponding information:

- Dataset used to train the model

- Version of the model deployed

- Feature vector of the model

- Model prediction value

- User who approved deployment of model

- Time of deployment

- Comments on discussion and reviewer forums at the time of model deployment
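To make the "go back in time" lookup concrete, the sketch below models a deployment record carrying several of the fields listed above and finds which model version was live at a given moment. The `DeploymentRecord` type, the `model_at` function, and all sample values are hypothetical; the platform's actual log schema is not described here.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass(frozen=True)
class DeploymentRecord:
    model_version: str
    training_dataset: str
    approved_by: str
    deployed_at: datetime
    review_comments: str = ""


def model_at(records: List[DeploymentRecord], when: datetime) -> Optional[DeploymentRecord]:
    """Return the most recent deployment at or before `when`, or None."""
    candidates = [r for r in records if r.deployed_at <= when]
    return max(candidates, key=lambda r: r.deployed_at) if candidates else None


# Hypothetical deployment history
history = [
    DeploymentRecord("v1", "loans_2022.csv", "alice", datetime(2023, 1, 10)),
    DeploymentRecord("v2", "loans_2023.csv", "bob", datetime(2023, 6, 1)),
]

found = model_at(history, datetime(2023, 3, 15))
print(found.model_version, found.training_dataset, found.approved_by)
```

Per-prediction details such as the feature vector and the prediction value would come from the request and response logs, joined to the deployment record by timestamp or model version.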

Certifications

"Gain confidence in our expertise with our industry-recognized certifications."